IPAdapting myself

There's a tool that AI generators know well: IPAdapter. Created by a team at Tencent AI Lab, its purpose is to make image referencing easier in a generative image workflow. With it you can basically borrow the general style, pose, composition or subject of a reference image and use it to influence the result alongside your text prompt.

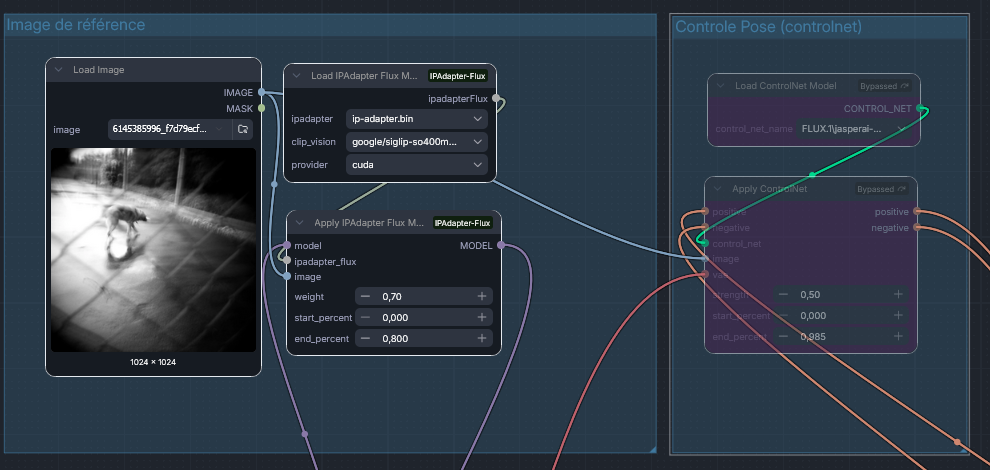

In my workflow, here's the part that manages the IPAdapter:

You can see that there is a bypassed ControlNet next to it: that works as well. The original idea and the various uses are well explained on the project's GitHub page. Obviously, there are a few prerequisites:

- the bin file should be one that is compatible with the loaded model

- use a specific CLIP file, following the developers' recommendation

- same for the ControlNet part

- do not use very high values: learn the minimum and maximum values given in the docs and play within that range

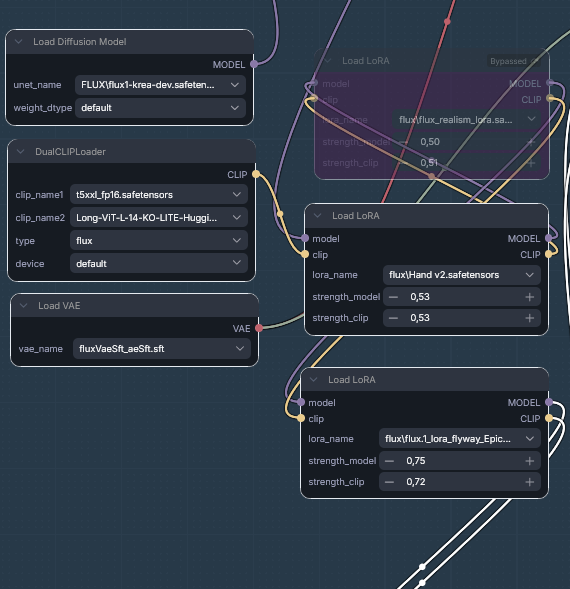

Of course, it has to be connected at the point where you load your model. Here I use Flux1Dev or derived models and LoRAs, which are not expected to take a negative prompt. Instead, a ConditioningZeroOut node takes the output of your text prompt and feeds the negative input of your KSampler.
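To make that wiring concrete, here is a rough sketch of the relevant part of a workflow in ComfyUI's API (JSON) format, written as a Python dict. It is not my actual graph: the node ids, the prompt text and the sampler settings are placeholders, and nodes "1" (model and CLIP loading, IPAdapter included) and "4" (the empty latent) are assumed to exist elsewhere. The small `upstream_class` helper is only there for illustration.

```python
# Sketch of a ComfyUI API-format workflow fragment (placeholder ids and values).
# Node "1" (model/CLIP loader + IPAdapter chain) and node "4" (empty latent)
# are assumed to be defined elsewhere in the full workflow.
workflow = {
    "2": {  # the text prompt
        "class_type": "CLIPTextEncode",
        "inputs": {"text": "self portrait, soft light", "clip": ["1", 1]},
    },
    "3": {  # zeroed copy of the prompt conditioning, used instead of a negative prompt
        "class_type": "ConditioningZeroOut",
        "inputs": {"conditioning": ["2", 0]},
    },
    "5": {
        "class_type": "KSampler",
        "inputs": {
            "model": ["1", 0],
            "positive": ["2", 0],
            "negative": ["3", 0],  # fed by ConditioningZeroOut, not by a second prompt
            "latent_image": ["4", 0],
            "seed": 0,
            "steps": 20,
            "cfg": 1.0,
            "sampler_name": "euler",
            "scheduler": "simple",
            "denoise": 1.0,
        },
    },
}


def upstream_class(graph, node_id, input_name):
    """Return the class_type of the node feeding an input, or None if it is outside this fragment."""
    source_id = graph[node_id]["inputs"][input_name][0]
    node = graph.get(source_id)
    return node["class_type"] if node else None


print(upstream_class(workflow, "5", "negative"))  # ConditioningZeroOut
```

Each link is a pair of source node id and output index, so `["3", 0]` reads as "first output of node 3".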

This of course raises a lot of questions, and eyebrows, about copyright. To date, there are laws protecting artists, but they are either very recent or incomplete. Again, this is just a tool, and I choose to use it on myself. After all, Warhol used to consider art pieces as products, naming his studio "The Factory" and establishing a relationship between producing art and selling it on a massive scale. That's why I'm choosing to use this on my own stuff. Not sure what will come out of this, but it interests me a lot, and it is a good experiment too.

So be it, then.
